Aside from using a directional antenna, one of the simplest ways to determine the direction of a received signal is what is often referred to as a "TDOA" system, which stands for Time Difference Of Arrival.

One method of implementation uses two separate antennas, switched at an audible rate and connected to a narrowband FM receiver. In its simplest form, the antenna-switching signal could be produced by anything from a 555 timer to an oscillator made from logic gates or an op-amp: All that is necessary is that the duty cycle of the driving (square) waveform be "near-ish" 50% (+/- 30% is probably OK) and that its level be sufficient to drive the switching diodes on the antenna adequately.

See the diagram below for an explanation of how this system works:

Figure 1: A diagram showing how the "TDOA" system works.

In short, if both antennas - which are typically half-wave dipoles - are exactly the same distance from the signal source, the RF waveform on each of the two antennas will have arrived at exactly the same time. If we electronically switch between the two antennas, nothing will happen because both signals are identical.

If one of the two dipoles in our DF antenna is closer to the transmitter than the other, switching between the two antennas will cause the receiver to see a "jump" in the RF waveform: Switching from, say, A to B will cause it to jump forward, while switching from B to A will cause it to jump backward.
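To put rough numbers on the size of that "jump", the sketch below computes the phase step from the extra path length to the farther element. It assumes a distant transmitter, so that a plane wave arrives at both elements. The angle is measured from broadside and is positive when the transmitter lies toward element B's side. The function name and sign convention are hypothetical, chosen only for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_jump_deg(freq_hz: float, spacing_m: float, angle_deg: float) -> float:
    """Phase step (degrees) seen when switching from element A to element B.

    Hypothetical helper.  Assumes a plane wave from a distant transmitter;
    angle_deg is measured from broadside, positive toward element B's side,
    so a positive result means B's waveform leads A's (a forward jump).
    """
    wavelength_m = SPEED_OF_LIGHT / freq_hz
    # Extra distance the wavefront travels to reach the farther element
    path_diff_m = spacing_m * math.sin(math.radians(angle_deg))
    return 360.0 * path_diff_m / wavelength_m


# 146 MHz, elements 16 inches (0.4064 m) apart
for angle in (0, 10, 45, 90, -90):
    jump = phase_jump_deg(146e6, 0.4064, angle)
    print(f"{angle:+4d} deg off broadside -> {jump:+7.2f} deg jump")
```

The jump is zero at broadside and grows as the array is rotated away from it, changing sign on the other side. At 90 degrees, the jump equals the full element spacing expressed in degrees of wavelength. Elements half a wavelength apart would therefore produce a 180-degree step, at which point the direction of the jump becomes ambiguous.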

The switching, which causes the RF waveform to "jump", is seen by the FM receiver as a phase shift in the received signal, and being an FM receiver, it detects this as a "glitch" in the audio, as depicted in Figure 2:

Figure 2: Example of the "glitches" seen on the audio of a receiver connected to a TDOA system that switches antennas.

Because the discontinuity in the RF waveform caused by the antenna switch is abrupt, the "glitch" in the audio waveform is transient, occurring only when the antenna is switched. You might notice something else in Figure 2 as well: If we assume that the first glitch comes from switching from antenna "A" to antenna "B" and that it is positive-going, the glitch from switching from antenna "B" back to antenna "A" will be negative-going. Ideally, the glitches will be equal and opposite, but sometimes (as is the case in Figure 2) they are not exactly the same, usually because of multipath distortion at the DF antenna array.
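A few lines of simulation show why the glitches alternate in polarity. The sketch below represents an unmodulated carrier as complex baseband samples. Element A is the phase reference and element B is offset by a fixed phase. It uses an idealised FM discriminator, which measures the phase change between successive samples. The sketch deliberately ignores the IF and audio filtering that, in a real receiver, smear each step into the rounded glitch of Figure 2. It also does not model multipath, so here the glitches come out exactly equal and opposite.

```python
import cmath


def switched_carrier(phase_b_rad: float, samples_per_half: int, cycles: int) -> list[complex]:
    """Unmodulated carrier as seen through the antenna switch (complex baseband).

    Element A is the phase reference (0 rad); element B arrives phase_b_rad
    ahead of it.  Each cycle selects A for samples_per_half samples, then B.
    """
    a = complex(1.0, 0.0)
    b = cmath.exp(1j * phase_b_rad)
    samples: list[complex] = []
    for _ in range(cycles):
        samples += [a] * samples_per_half  # antenna A selected
        samples += [b] * samples_per_half  # antenna B selected
    return samples


def fm_discriminator(iq: list[complex]) -> list[float]:
    """Idealised FM detector: phase change (radians) between successive samples."""
    return [cmath.phase(cur * prev.conjugate()) for prev, cur in zip(iq, iq[1:])]


audio = fm_discriminator(switched_carrier(phase_b_rad=0.5, samples_per_half=10, cycles=3))
glitches = [(n, round(v, 3)) for n, v in enumerate(audio) if abs(v) > 1e-9]
print(glitches)  # A->B steps are positive, B->A steps negative
```

Between switches the output is zero. A discriminator like this can only report phase steps between -180 and +180 degrees, so a larger jump would wrap around and show the wrong polarity.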

At this point, several things may have already occurred to you:

- If switching from antenna "A" to antenna "B" causes a positive glitch (and switching back causes a negative one), we know that the antenna array is not broadside to the transmitter.

- If we then rotate the antenna so that switching from antenna "B" to antenna "A" causes a positive glitch and switching from "A" to "B" causes a negative one, we can reasonably assume, if antenna "A" was closer to the transmitter before we rotated it, that the bearing to the transmitter lies somewhere between the two antenna positions.

- If the two antennas are equidistant from the transmitter, the glitches will go away entirely. At this point, the antenna will be oriented directly broadside to the transmitter, indicating its bearing.

- The magnitude of the glitches gives some indication of the pointing error: If the antennas are equidistant, with the two-element array perfectly broadside to the transmitter, the glitches will be practically nonexistent. If the antenna is 90 degrees off (i.e. with the boom "pointed" at the transmitter as one would point a normal Yagi antenna), the glitches, and the audible tone, will be at their highest possible amplitude.

If the antennas are switched at an audible rate, these glitches will be clearly audible as a tone superimposed on the received signal. It is not possible to hear the polarity of these glitches, and thus whether the transmitter is to our left or right. Even so, by sweeping the antenna back and forth and mentally noting where the "null" (i.e. the point at which the tone disappears) is, we can infer the direction of the transmitter, and that is exactly how the simplest of these TDOA systems work.
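The "sweep and listen" procedure can be mimicked in software. The sketch below assumes you have a list of headings from one sweep and a relative tone level at each; how those levels are measured is left open. It finds the quietest heading and walks outward in both directions until the tone rises above a chosen threshold. It then splits the difference, much as an operator brackets a null by ear. The function name and the threshold value are hypothetical. Like the by-ear method, it cannot tell the true bearing from its reciprocal.

```python
import math


def bracket_null(headings_deg: list[float], levels: list[float], threshold: float) -> float:
    """Estimate the null heading by bracketing it between the two points where
    the tone first rises above `threshold` on either side of the quietest reading.

    Assumes headings_deg is sorted and covers a single sweep that does not wrap
    through 0/360 degrees.
    """
    if len(headings_deg) != len(levels) or not levels:
        raise ValueError("need one tone level per heading")
    quietest = min(range(len(levels)), key=levels.__getitem__)

    left = quietest
    while left > 0 and levels[left] <= threshold:
        left -= 1  # walk counter-clockwise until the tone comes back
    right = quietest
    while right < len(levels) - 1 and levels[right] <= threshold:
        right += 1  # walk clockwise until the tone comes back
    return (headings_deg[left] + headings_deg[right]) / 2.0


# Simulated sweep: tone level proportional to |sin(error)|, true null at 12 degrees
sweep = list(range(-40, 45, 5))
tone = [abs(math.sin(math.radians(h - 12))) for h in sweep]
print(bracket_null(sweep, tone, threshold=0.2))
```

With 5-degree steps, the estimate lands within a degree of the simulated null. Finer steps or a lower threshold narrow the bracket further.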

For an example of a simple TDOA system using a 555 timer, see the following web page:

WB2HOL 555 Time Difference-of-Arrival RDF - link

This circuit is about as simple as it gets: A 555 timer that generates a square-ish wave. It is up to the user to move the antenna back and forth, note the null and infer from that the direction of the signal.

It should be noted that with this simple arrangement the transmitter could be behind the user. With a bit of skill and practice, one can resolve this 180-degree ambiguity (particularly if one is fairly close to the transmitter) by noting how the apparent bearing changes as the user moves relative to the transmitter.

Detecting the glitches electronically:

To electronically determine whether the signal is to the left or right of our position, we must be able to tell whether the glitch that occurs when switching from one antenna to the other is positive-going or negative-going. This takes some simple circuitry, and one way to do it is with a "window" detector: a detector that "looks" at the receive audio for a brief instant just after the antenna switch occurs. Here are two circuits that do just that:

WB2HOL's Simple Time Difference-of-Arrival RDF - link

and

The WA7ARK TDOA units - link (some of the circuits on this page are described below)

In looking at the WA7ARK pages, we find two units that indicate left/right in different ways:

- In the "Aural" unit, a 565 chip, which is a PLL (Phase Locked Loop), is used both to generate the signal for switching the antennas and to indicate whether the signal is to the left or right by changing the pitch of the tone.

- The "Metered" unit described below takes an approach very similar to that of the WB2HOL Simple Time Difference-of-Arrival RDF circuit: A "snapshot" of the audio is taken at the appropriate time to determine the polarity of the glitches, and this is displayed as a left/right indication on a meter movement.
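In software, the same "window" idea amounts to averaging the audio over a short span just after each antenna switch and comparing the two switching directions. The sketch below assumes you already know the sample index of every switch edge and whether it was an A-to-B or B-to-A transition. In hardware, the circuit that drives the antennas provides exactly this. The `delay` and `width` values are hypothetical parameters and would have to match the receiver's audio delay.

```python
import math


def window_detect(audio: list[float], edges: list[tuple[int, bool]], delay: int, width: int) -> float:
    """Return mean(window after A->B switches) - mean(window after B->A switches).

    edges holds (sample_index, a_to_b) pairs.  A positive result means the
    A->B glitches are positive-going, a negative result means they are
    negative-going, and a result near zero suggests the array is broadside.
    """
    after_ab: list[float] = []
    after_ba: list[float] = []
    for index, a_to_b in edges:
        window = audio[index + delay : index + delay + width]
        if len(window) < width:
            continue  # edge too close to the end of the buffer
        mean = sum(window) / width
        (after_ab if a_to_b else after_ba).append(mean)
    if not after_ab or not after_ba:
        raise ValueError("need at least one complete window of each polarity")
    return sum(after_ab) / len(after_ab) - sum(after_ba) / len(after_ba)


# Synthetic test: switch every 50 samples, glitch of +/-0.3 lasting 4 samples
# starting 2 samples after each edge, buried under a larger unrelated tone.
edges = [(n, (n // 50) % 2 == 0) for n in range(0, 2000, 50)]
audio = [0.8 * math.sin(2 * math.pi * 0.0123 * n) for n in range(2100)]
for index, a_to_b in edges:
    for k in range(index + 2, index + 6):
        audio[k] += 0.3 if a_to_b else -0.3
print(window_detect(audio, edges, delay=2, width=4))
```

The unrelated tone is larger than the glitches. Because it is not synchronised with the switching, it largely averages out, and the result comes out close to the 0.6 difference between the two glitch levels.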

Finding the glitches:

At this point in the discussion I would like to redirect your attention back to Figure 2, above. You'll notice that these glitches are really quite brief: They don't last very long at all - and most of the space between glitches is empty - at least if there is no other audio on the transmitted signal.

What if the signal being received is heavily modulated with voice or noise? The glitch can easily be lost amongst the clutter, but we have an advantage: We know precisely when that glitch is going to occur, so we can look for it only then and ignore everything else. By looking for the glitch selectively, we greatly reduce the effect of any modulation that would otherwise "dilute" the signal we want. This is the method used in the "Metered" version of the WA7ARK circuit, reproduced below:

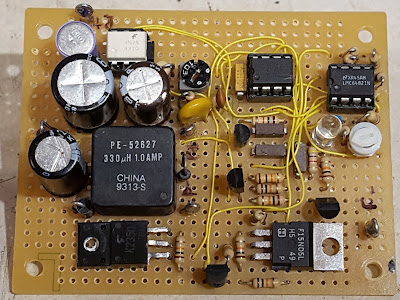

- U3C, an op amp, is wired as an oscillator with the frequency selected as being in the neighborhood of 10 kHz. The precise frequency really isn't critical, but it should be stable: Just make sure that you don't use a ceramic capacitor for C4.

- U2 is a 4017 CMOS divide-by-10 counter. The "Cout" pin has a square wave at 1/10th of the frequency of the U3C oscillator (approximately 1 kHz) and this signal, buffered by U3D, drives the switched antennas.

- The FM receiver's audio, which contains the "glitches", is connected via J1.

- For every 10 counts made by U2, there are two glitches: One occurs when the square wave output from U3D goes from high to low, and another when it goes from low to high. During that time, the "0-9" outputs of U2 go high one at a time. Each represents one of the 10 counts and is high for only 10% of the total time.

- As shown in the diagram, we select two of the 0-9 outputs of U2, 5 counts apart from each other, choosing the outputs that go high at the same instant that each "glitch" from the receiver's audio arrives.

- U1 contains electronic switches that are driven by the two brief signals from U2 that we have selected to go high when the glitches arrive. When activated, the appropriate switch inside U1 closes at a time that coincides with the glitch. This brief connection changes the charge on C2 or C3, and the resulting voltage correlates with both the amplitude and the polarity of the glitch.

- U3A and U3B buffer the voltages on C2 and C3 and feed them to a zero-centered meter: The more the voltages on C2 and C3 differ from each other, the farther the meter swings away from center. Since these voltages are derived from the glitches, the meter indication tells us not only whether the signal is to our left or right, but also something about how far to the left or right it is!
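For readers heading toward a software version, the behaviour of this circuit can be approximated in a few lines. The sketch below is a discrete-time model, not a description of the actual schematic. It assumes one audio value per 4017 count, that is, one cycle of the roughly 10 kHz U3C clock. It treats the antenna drive as the 4017 carry-out, which is high for counts 0-4 and low for counts 5-9. Each switch-and-capacitor pair (U1 into C2 or C3) is modelled as a simple exponential average that is updated only during its selected count. The function names and the `alpha` smoothing factor are hypothetical.

```python
def antenna_drive(count: int) -> bool:
    """4017 carry-out level for a given count: high for counts 0-4, low for 5-9."""
    return count % 10 < 5


def metered_unit(audio_per_count: list[float], tap_x: int, tap_y: int, alpha: float = 0.05) -> float:
    """Approximate meter reading (C2 voltage minus C3 voltage).

    Each audio value corresponds to one count of the decade counter.  During
    count tap_x the "switch" to C2 closes and C2 moves a fraction alpha toward
    the audio value; likewise for tap_y and C3.  All other audio is ignored.
    """
    c2 = c3 = 0.0
    for n, sample in enumerate(audio_per_count):
        count = n % 10
        if count == tap_x:
            c2 += alpha * (sample - c2)
        elif count == tap_y:
            c3 += alpha * (sample - c3)
    return c2 - c3


# Synthetic audio: a glitch at each change of the antenna drive, positive when
# it goes high (count 0) and negative when it goes low (count 5).
audio = [
    0.4 if antenna_drive(n) and not antenna_drive(n - 1)
    else -0.4 if antenna_drive(n - 1) and not antenna_drive(n)
    else 0.0
    for n in range(2000)
]
print(metered_unit(audio, tap_x=0, tap_y=5))  # taps on the glitches
print(metered_unit(audio, tap_x=2, tap_y=7))  # taps on empty counts
```

With the taps on the counts where the glitches land, the reading approaches the full difference between the glitch levels. With the taps on empty counts, it stays at zero. In a real receiver the audio is delayed, so the glitches will generally not fall on counts 0 and 5.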

Because we look at the audio only during the 20% of the time when a glitch is coming in (two of every ten counts), we better reject other audio that might be transmitted on that signal. In addition, the filtering provided by capacitors C2 and C3 and resistor R3 averages out noise and other modulation, further improving our effective sensitivity and reducing our susceptibility to noise and modulation on the received signal!

In actual use, one would determine the optimal taps for "X" and "Y" on U2 in the diagram above, either with an oscilloscope or experimentally: While receiving a clean, unmodulated signal with an antenna like the one described below, adjust the taps for the highest meter indication. For calibration, one would simply set the volume on the receiver to produce full-scale deflection when the antenna is pointing "away" (i.e. rotated 90 degrees from the position in which the two elements are broadside to the transmitted signal). If necessary, you may make R4 variable by using a 1k resistor in series with a 10k-25k potentiometer.
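The "adjust the taps for the highest meter indication" step is a small search, and in software it can be automated. The sketch below assumes audio recorded while receiving a clean, unmodulated signal with the antenna turned well away from broadside. It stores one value per counter step, as in a 4017-style timing scheme. It tries each pair of taps five counts apart and keeps the pair giving the largest reading. The function name and data layout are hypothetical.

```python
def best_taps(audio_per_count: list[float]) -> tuple[int, int]:
    """Return (X, Y), five counts apart, maximising |mean(audio at X) - mean(audio at Y)|."""

    def mean_at(count: int) -> float:
        values = audio_per_count[count::10]  # every sample taken during this count
        return sum(values) / len(values)

    # Taps (x, x+5) and (x+5, x) give the same magnitude with opposite sign,
    # so only x = 0..4 needs to be searched.
    candidates = [(x, x + 5) for x in range(5)]
    return max(candidates, key=lambda taps: abs(mean_at(taps[0]) - mean_at(taps[1])))


# Receiver audio delayed by two counts: glitches land on counts 2 and 7.
audio = [0.0] * 1000
for start in range(0, 1000, 10):
    audio[start + 2] = 0.4
    audio[start + 7] = -0.4
print(best_taps(audio))
```

Which of the two selected outputs feeds X and which feeds Y only decides which way the meter swings for a given side, since swapping them reverses the sign of the C2/C3 difference.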

The antenna:

Up to this point the antenna has been mentioned only in passing. The simplest antenna - and one that works well for practically any of the simple TDOA systems (of the "left/right" variety) you are likely to find - is depicted below:

While the antenna depicted in Figure 4 looks like a 2-element Yagi, it is not. What's more, it is important to realize that while you would line up the elements of a Yagi to point it at the signal being sought, this antenna, when "pointed" toward the transmitter, will have its elements oriented broadside to it. In other words, if you are facing the transmitter and holding the antenna centered in front of you, one element will be to your right and the other will be the same distance away on your left.

A few notes about construction:

- The two elements must not be spaced farther than 1/2 wavelength apart at the highest frequency for which you plan to use the antenna. If they are spaced farther than 1/2 wavelength apart, you'll get nonsensical readings! Spacing them about 1/4 wavelength apart on 2 meters (144 MHz) results in a fairly compact and manageable antenna.

- Make sure that the two pieces of coax depicted by "L2" are of the same type and cut to the same length, an electrical 1/2 wavelength. Note that because of the coax's "velocity factor", its physical length will be significantly shorter than its electrical length.

- For D1 and D2, use identical diodes. A PIN switching diode is preferable, but a 1N914 or 1N4148 will work in a pinch with somewhat degraded performance. Reportedly, 1N4007 diodes (the 1000-volt member of the 1N400x family) work fairly well on 2 meters for this purpose.

- For 2 meters, typical values might be:

- L1 = 16 inches (41 cm)

- L2 = 13.5 inches (34 cm) for cable with a solid polyethylene dielectric.

- L3 = 38 inches (97 cm) total, consisting of two pieces of half that length.
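The arithmetic behind these dimensions is easy to script. The sketch below computes three things. The first is the free-space wavelength. The second is the largest element spacing allowed by the half-wavelength rule at the top of your frequency range. The third is the physical length of a piece of coax for a given electrical length and velocity factor. The velocity factor must come from the cable's data sheet; about 0.66 is typical for solid polyethylene. The helper names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second
METRES_PER_INCH = 0.0254


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres."""
    return SPEED_OF_LIGHT / freq_hz


def max_element_spacing_m(highest_freq_hz: float) -> float:
    """Elements must be no more than 1/2 wavelength apart at the highest frequency used."""
    return wavelength_m(highest_freq_hz) / 2.0


def coax_physical_length_m(freq_hz: float, electrical_fraction: float, velocity_factor: float) -> float:
    """Physical length of coax that is `electrical_fraction` of a wavelength long electrically."""
    return electrical_fraction * wavelength_m(freq_hz) * velocity_factor


def inches(metres: float) -> float:
    """Convert metres to inches."""
    return metres / METRES_PER_INCH


print(f"Max spacing at 148 MHz: {inches(max_element_spacing_m(148e6)):.1f} in")
print(f"1/4 wavelength at 146 MHz: {inches(wavelength_m(146e6) / 4):.1f} in")
```

To size the L2 pieces, pass the electrical fraction your layout calls for and the cable's velocity factor to `coax_physical_length_m`. Cutting both pieces from the same roll keeps them matched even if the data-sheet figure is slightly off.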

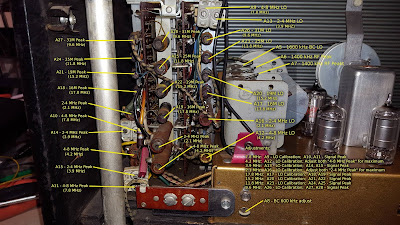

If you look at Figure 3, you will see J2, which is connected to the DF antenna, and J3, which is connected to the receiver. Separating the two is C7, a 47pF capacitor: C7 is too small to pass our audio-frequency antenna-switching signal effectively, and it thus keeps that signal out of the front end of our receiver.
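A 47 pF capacitor can separate the two signals because a capacitor's reactance, X = 1/(2πfC), falls as frequency rises. The short calculation below compares C7's reactance at an assumed 1 kHz switching rate with its reactance at 146 MHz.

```python
import math


def capacitive_reactance_ohms(freq_hz: float, capacitance_f: float) -> float:
    """Magnitude of a capacitor's reactance: 1 / (2 * pi * f * C)."""
    return 1.0 / (2.0 * math.pi * freq_hz * capacitance_f)


C7_FARADS = 47e-12
for label, freq in (("1 kHz switching tone", 1e3), ("146 MHz RF", 146e6)):
    print(f"{label}: {capacitive_reactance_ohms(freq, C7_FARADS):,.1f} ohms")
```

The reactance is roughly 3.4 megohms at the switching frequency versus roughly 23 ohms on 2 meters. The audio-rate square wave is effectively blocked, while the RF passes with little loss.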

Our switching signal, a square wave, is coupled to the antenna via C6. This capacitor is large enough to pass the square wave, but because the signal is AC-coupled, our positive-going square wave from U3D becomes bipolar, centered about zero and going both positive and negative with respect to ground. R9 not only limits the level of the square wave fed to the diodes, but also isolates the RF signal present at J2/C7 from the rest of the circuit.
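The effect of AC coupling can be checked with a simple simulation. The sketch below models C6 driving a resistive load as a first-order RC high-pass filter and feeds it a 0-to-5 V, 1 kHz square wave. The real load is the diode network and R9, so this is only an approximation. The 5 V level and the 0.1 s time constant are assumed values, not taken from the schematic. Once the filter settles, the output is centred on zero and swings roughly +/-2.5 V.

```python
def rc_highpass(samples: list[float], dt_s: float, rc_s: float) -> list[float]:
    """First-order RC high-pass filter (discrete approximation of a series capacitor)."""
    a = rc_s / (rc_s + dt_s)
    out: list[float] = []
    y = 0.0
    prev_x = 0.0  # input assumed to sit at 0 V before the first sample
    for x in samples:
        y = a * (y + x - prev_x)
        prev_x = x
        out.append(y)
    return out


SAMPLES_PER_PERIOD = 100  # 1 kHz square wave sampled at 100 kHz
DT = 1e-3 / SAMPLES_PER_PERIOD
square = [5.0 if n % SAMPLES_PER_PERIOD < SAMPLES_PER_PERIOD // 2 else 0.0
          for n in range(1000 * SAMPLES_PER_PERIOD)]  # 1000 periods = 1 second
coupled = rc_highpass(square, DT, rc_s=0.1)

last_period = coupled[-SAMPLES_PER_PERIOD:]
print(f"mean {sum(last_period) / len(last_period):+.3f} V, "
      f"max {max(last_period):+.2f} V, min {min(last_period):+.2f} V")
```

Because the time constant is much longer than one period of the square wave, the output droops only slightly between edges. This is why the coupling capacitor needs to be "large enough".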

The now-bipolar square wave travels down the coax to our antenna along with the RF. When it is positive-going, D1 (in Figure 4) conducts while D2 is reverse-biased and shut off; when it is negative-going, D2 conducts and D1 is reverse-biased. A diode is transparent to RF only when it is conducting, so in this way we can alternately select either the left or the right element.

When using the antenna:

- The above antenna works well only for vertically polarized signals, since it must be held with the elements vertical to get left/right indications.

- Remember that you do NOT use this as you would a Yagi. The tone will disappear when the elements are vertical and the plane of the two elements is broadside to the distant transmitter. In other words, if you are holding the antenna up to your chest, one element will be near your left arm and the other near your right.

- If you "point" the boom at the transmitted signal as if it were a normal Yagi, you will get the loudest tone.

- Because this is FM, and with FM the signal strength doesn't matter once the signal is full-quieting, the loudness of the tone tells you nothing about the strength of the received signal. Again, the loudest tone indicates that the antenna is about 90 degrees off the bearing of the transmitter, and the tone disappearing tells you that the antenna is oriented broadside to the transmitter.

- Remember that if the transmitter is behind you, the left-right indications (if the unit has the capability) will become reversed.

- Multipath and reflections can easily confuse a system like this. Note the trend of the bearings that you are getting rather than relying on a single bearing that suddenly indicates a wildly different direction. If you do get vastly different readings, move to a different location and re-check. Unless you are very near the transmitter (which probably means that you can disconnect the antenna cable from the radio and still hear the transmitter), a small change in location should not cause a large change in bearing: If it does, suspect a reflection.
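If bearings are being logged, the "watch the trend" advice can be partly automated. The sketch below is one possible approach, not the author's. It computes a circular mean, so that readings either side of north, such as 350 and 10 degrees, average correctly. It then flags readings that deviate from that mean by more than a chosen tolerance as possible reflections. The 30-degree tolerance is an arbitrary assumption. A flagged bearing is a cue to move and re-check, not something software can resolve on its own.

```python
import math


def circular_mean_deg(bearings_deg: list[float]) -> float:
    """Mean direction of a set of bearings, handling the 0/360 wrap-around."""
    s = sum(math.sin(math.radians(b)) for b in bearings_deg)
    c = sum(math.cos(math.radians(b)) for b in bearings_deg)
    return math.degrees(math.atan2(s, c)) % 360.0


def angle_diff_deg(a: float, b: float) -> float:
    """Signed smallest difference a - b, in the range [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def suspect_bearings(bearings_deg: list[float], tolerance_deg: float = 30.0) -> list[float]:
    """Bearings that disagree with the overall trend by more than tolerance_deg."""
    trend = circular_mean_deg(bearings_deg)
    return [b for b in bearings_deg if abs(angle_diff_deg(b, trend)) > tolerance_deg]


readings = [350, 355, 2, 5, 120]  # one wildly different reading
print(f"trend {circular_mean_deg(readings):.1f} deg, suspect {suspect_bearings(readings)}")
```

With only a handful of readings, a single outlier still pulls the mean noticeably; here it shifts the trend to about 12 degrees instead of close to north. Recomputing the trend after dropping the suspect readings is a reasonable refinement.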

A few comments about some inexpensive imported radios and their suitability for use with these types of circuits:

In recent years a number of very inexpensive radios, mostly with Chinese names, have appeared on the market in the sub-$100 price range (some at $50 or below), and the question arises: Are these suitable for direction-finding?

The quick answer is "possibly not."

Many of these radios use an "all-in-one" receiver chip which has several issues:

- These radios tend to overload very easily in the presence of strong signals. If one is very close to the transmitter being sought, they can do strange things such as introducing phase shifts. If one is attempting to use one of these radios with an "Offset Mixer" (a different article...), it can simply become impossible!

- Many of these radios also have an audio filter that kicks in when the signal is weak and noisy, and it cannot be disabled. This low-pass filter, which is apparent when the hiss or audio suddenly sounds somewhat muffled, changes the audio delay. This will likely have little effect with the simplest TDOA circuit, where one is simply listening to a tone. However, it will likely "break" fancier ones that provide left-right indications, since those sample the audio at a fixed time after each antenna switch.

If you have one of these inexpensive radios and can't seem to make the circuit work, try a different radio - preferably one from one of the mainstream amateur radio brands - during your troubleshooting!

In the next part - to be posted some time in the future - we'll talk about how one might implement what we have learned about the circuits, above, in software.